“Deplatforming” refers to the banning of individuals, communities, or entire websites that spread misinformation or hate speech. Social media platforms implement this practice to reduce harmful content, but its effectiveness is debatable.

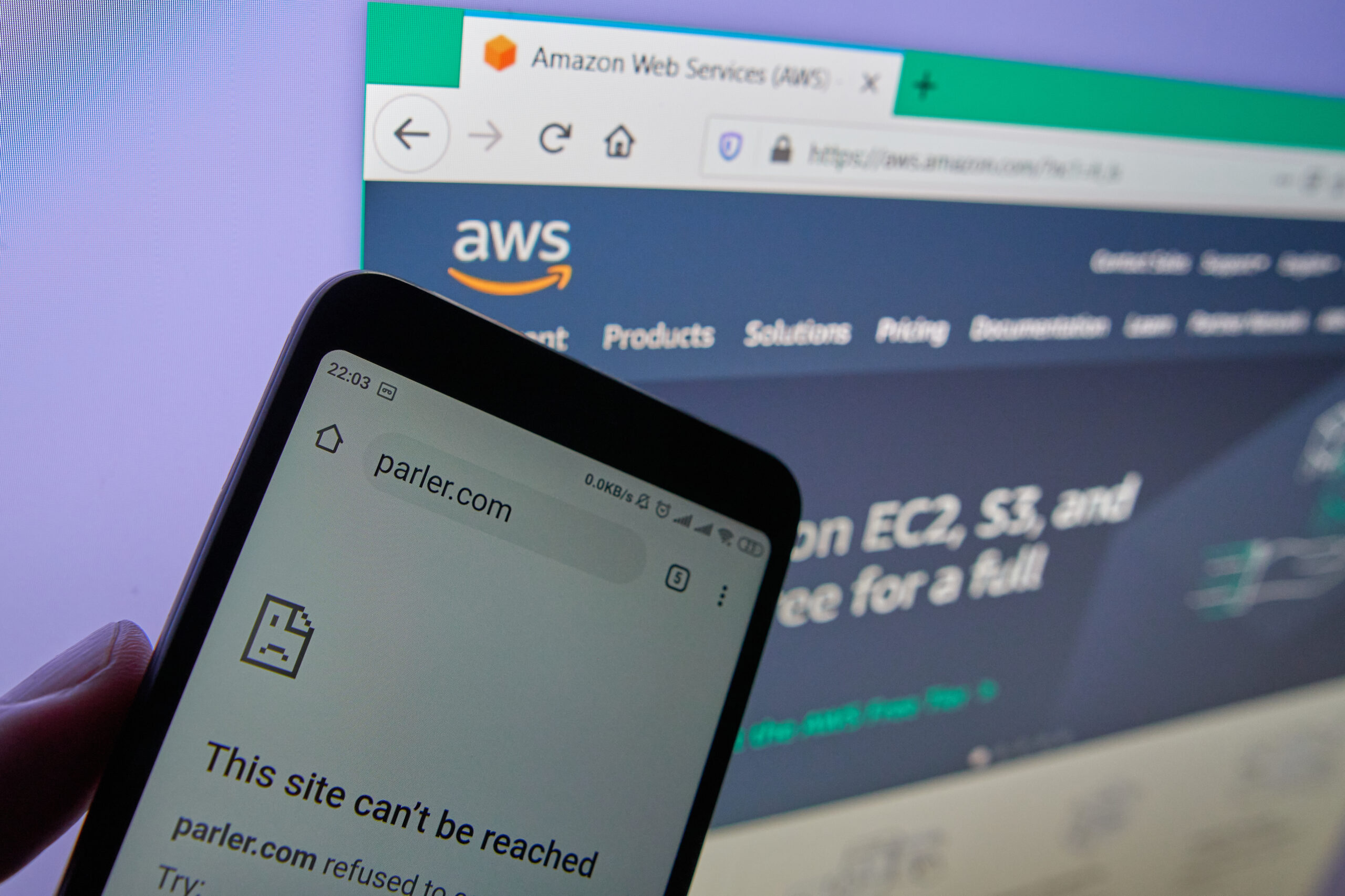

Researchers from the Computational Social Science Lab at the University of Pennsylvania and the School of Computer and Communication Sciences at École Polytechnique Fédérale de Lausanne conducted a study, published in PNAS Nexus, to understand the impact of deplatforming on the social media site Parler. Parler is an example of a “fringe” site, meaning that it is less heavily moderated than mainstream sites such as Twitter, YouTube, and Facebook. Parler, which is also associated with far-right extremists, was suspended by its host, Amazon Web Services, shortly after the January 6, 2021 United States Capitol attack because insurrectionists had apparently used it to plan the attack.

A limitation of existing studies of deplatforming is that they do not consider all the platforms that users can migrate to. They also neglect to track both “active” and “passive” social media use, which potentially underestimates the passive consumption of harmful content. To address these shortcomings, the researchers used desktop and mobile user activity data provided by Nielsen, a company that tracks the online consumption habits of users, focusing on the periods before (December 2020) and after (January 11, 2021 to February 25, 2021) the Capitol riot. They then used a “difference in differences” (DiD) model to estimate the causal effect of the deplatforming on total activity on Parler as well as on other fringe sites such as Rumble and Gab.
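For readers unfamiliar with the method, the core idea of a difference-in-differences estimate can be sketched in a few lines of Python. This is only a minimal two-group, two-period illustration, not the study's actual model, which is not described in detail here. The function name `did_estimate`, the split into "treated" and "control" users, and every number below are hypothetical and chosen purely for illustration.

```python
from statistics import mean


def did_estimate(treated_pre, treated_post, control_pre, control_post):
    """Return a simple 2x2 difference-in-differences estimate.

    The estimate is the change in the treated group's average outcome
    minus the change in the control group's average outcome.
    """
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical minutes of daily fringe-site activity per user.
# These values are made up and do not come from the study.
treated_pre = [10.0, 12.0, 14.0]   # assumed: affected users, before
treated_post = [11.0, 13.0, 15.0]  # assumed: affected users, after
control_pre = [2.0, 3.0, 4.0]      # assumed: comparison users, before
control_post = [3.0, 4.0, 5.0]     # assumed: comparison users, after

effect = did_estimate(treated_pre, treated_post, control_pre, control_post)
print(effect)
```

In this made-up example both groups change by the same amount, so the estimated effect is zero. Subtracting the control group's change is what separates the effect of the intervention from trends that would have affected everyone anyway.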

The authors observed that deplatforming decreased harmful activity on Parler, but this effect was almost exactly offset by an increase in activity on other fringe sites to which Parler users migrated. Overall, therefore, the deplatforming action caused no significant reduction in fringe activity, corroborating previous studies that suggest online hate groups are quick to recover from interventions when only a small part of their media “ecosystem” is disrupted.

These findings highlight the need to think about enforcement actions such as deplatforming from a multi-platform perspective. That is, actions that appear to be effective when evaluated on a single platform may simply drive the undesirable behavior elsewhere, where it may be less visible or more dangerous. To prevent fringe content from propagating through the media ecosystem, proactive enforcement actions may have to be coordinated across multiple sites simultaneously.

The full paper can be read here.

AUTHORS

DELPHINE GARDINER

—

Communications Specialist