Research

Penn Media Accountability Project (PennMAP)

PennMAP is building technology to detect patterns of bias and misinformation in media from across the political spectrum, spanning television, radio, social media, and the broader web. We will also track consumption of information via television, desktop computers, and mobile devices, as well as its effects on individual and collective beliefs and understanding. In collaboration with our data partners, we are also building a scalable data infrastructure to ingest, process, and analyze tens of terabytes of television, radio, and web content, as well as data from representative panels of roughly 100,000 media consumers over several years.

COVID – Philadelphia

Our team is building a set of interactive data dashboards that visually summarize human mobility patterns over time and space for a collection of cities, starting with Philadelphia, and that highlight potentially relevant demographic correlates. We are estimating a series of statistical models to identify correlations between demographic and human mobility data (e.g., do age, race, gender, and income level predict social distancing metrics?) and are using mobility and demographic data to train epidemiological models designed to predict the impact of policies around reopening and vaccination.

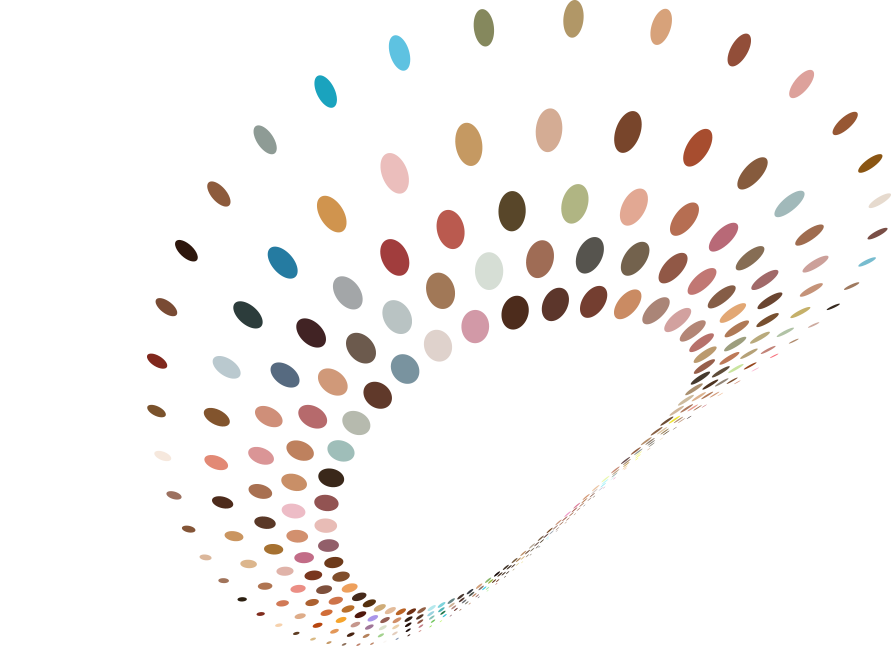

High-Throughput Experiments on Group Dynamics

To achieve replicable, generalizable, scalable, and ultimately useful social science, we believe that it is necessary to rethink the fundamental “one at a time” paradigm of experimental social and behavioral science. In its place we intend to design and run “high-throughput” experiments that are radically different in scale and scope from the traditional model. This approach opens the door to new experimental insights, as well as new approaches to theory building.

Common Sense

This project tackles the definitional conundrum of common sense head-on via a massive online survey experiment. Participants are asked to rate thousands of statements, spanning a wide range of knowledge domains, in terms of both their own agreement with the statement and their belief about the agreement of others. Our team has developed novel methods to extract statements from several diverse sources, including appearances in mass media, non-fiction books, and political campaign emails, as well as statements elicited from human respondents and generated by AI systems. We have also developed new taxonomies to classify statements by domain and type.

News

How cable news has diverged from broadcast news

Walter Cronkite was often cited as “the most trusted man in America” as he delivered the news on CBS in the 1960s and ’70s—a time when fewer news options created a “shared reality” that scholars argue fostered civic engagement, empathy, and shared national identity. The situation looks quite different in today’s disparate media landscape.

Duncan Watts Presents Fake News, Echo Chambers, and Algorithms at Penn Engineering ASSET Seminar

Computational Social Science Lab (CSSLab) Director Duncan Watts presented “Fake News, Echo Chambers, and Algorithms: A Data Science Perspective” at an ASSET (AI-enabled Systems: Safety, Explainability and Trustworthiness) Weekly Seminar on April 23, 2025.

Researcher Spotlight: CSSLab Members Present Work at Annenberg Workshop

Annenberg PhD student Neil Fasching and postdoctoral researcher Jennifer Allen of the Computational Social Science Lab (CSSLab) presented their research on April 15th at the 2nd annual Center for Information Network Democracy Workshop: The Impact of AI on (Mis)Information. CIND is an initiative led by Annenberg faculty members Sandra González-Bailón and Yphtach Lelkes, focused on the impact of information ecosystems on democratic participation in the digital age.