Project Ratio

“Fake news,” broadly defined as false or misleading information masquerading as legitimate news, is frequently asserted to be pervasive online, with serious consequences for democracy. The rise of fake news highlights the erosion of long-standing institutional bulwarks against misinformation in the internet age. In particular, since the 2016 US presidential election, the deliberate spread of misinformation on social media has generated extraordinary concern, in large part because of its potential effects on public opinion, political polarization, and ultimately democratic decision making. Inspired by “solution-oriented research,” Project Ratio aims to foster a news ecosystem and culture that values and promotes authenticity and truth.

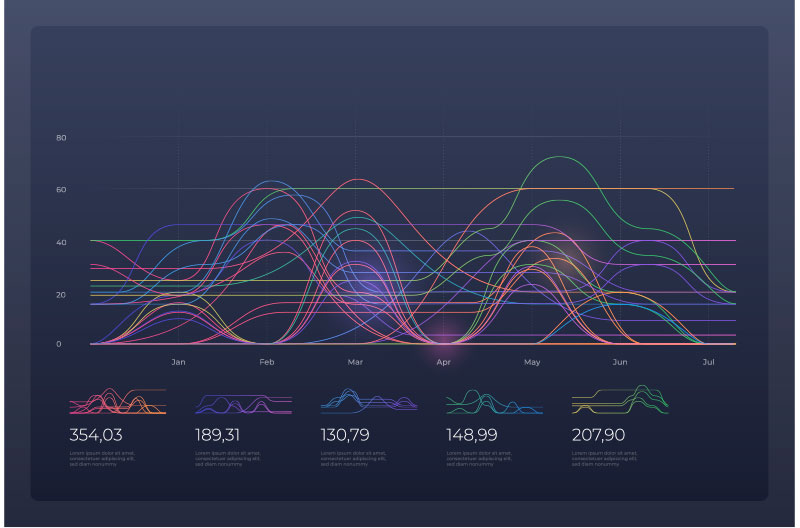

However, a proper understanding of misinformation and its effects requires a much broader view of the problem, encompassing biased and misleading (but not necessarily factually incorrect) information that is routinely produced or amplified by mainstream news organizations. Much remains unknown regarding the vulnerabilities of individuals, institutions, and society to manipulation by malicious actors. Project Ratio measures the origins, nature, and prevalence of misinformation, broadly construed, as well as its impact on democracy. We strive for objective and credible information, providing a first-of-its-kind, at-scale, real-time, cross-platform mapping of news content as it moves through the “information funnel”: from news production, through distribution and discovery, to consumption and absorption.

KEY RESEARCHERS

Duncan Watts

Stevens University Professor & twenty-third Penn Integrates Knowledge Professor

David Rothschild

Research Scientist @ Microsoft

Homa Hosseinmardi

Research Scientist

PUBLICATIONS

Rebuilding legitimacy in a post-truth age

Duncan J. Watts and David Rothschild.

The current state of public and political discourse is in disarray. Outright fake news stories circulate on social media. The result has been called a post-truth age, in which evidence, scientific understanding, or even just logical consistency have become increasingly irrelevant to political argumentation.

Don’t blame the election on fake news. Blame it on the media.

Duncan J. Watts and David Rothschild.

Since the 2016 presidential election, an increasingly familiar narrative has emerged concerning the unexpected victory of Donald Trump. Fake news was amplified on social networks. We believe that the volume of reporting around fake news, and the role of tech companies in disseminating those falsehoods, is both disproportionate to its likely influence in the outcome of the election and diverts attention from the culpability of the mainstream media itself.

The science of fake news

David M. J. Lazer, Matthew A. Baum, Yochai Benkler, Adam J. Berinsky, Kelly M. Greenhill, Filippo Menczer, Miriam J. Metzger, Brendan Nyhan, Gordon Pennycook, David Rothschild, Michael Schudson, Steven A. Sloman, Cass R. Sunstein, Emily A. Thorson, Duncan J. Watts and Jonathan L. Zittrain.

The rise of fake news highlights the erosion of long-standing institutional bulwarks against misinformation in the internet age. We discuss extant social and computer science research regarding belief in fake news and the mechanisms by which it spreads.

DATA

DATA OVERVIEW

The rise of big data has made the sheer quantity of available data salient, creating fertile ground for qualitative research and analysis that addresses the social, economic, cultural, and ethical questions of social science. Bridging computer science and social science, Project Ratio proposes a use-inspired research style and data-driven methodological directions for computational social science, yielding diverse perspectives on the explanation, understanding, and prediction of information flow and its impact. In collaboration with data providers, currently including Nielsen, PeakMetric, TVEyes, and Harmony Labs, we seek to establish a large-scale data infrastructure for studying the production, distribution, consumption, and absorption of information across the information ecosystem, illuminating each aspect of research on “fake news” in both depth and breadth.

Duncan Watts and CSSLab’s New Media Bias Detector

The 2024 U.S. presidential debates kicked off June 27, with President Joe Biden and former President Donald Trump sharing the stage for the first time in four years. Duncan Watts, a computational social scientist from the University of Pennsylvania, considers this an ideal moment to test a tool his lab has been developing during the last six months: the Media Bias Detector.

“The debates offer a real-time, high-stakes environment to observe and analyze how media outlets present and potentially skew the same event,” says Watts, a Penn Integrates Knowledge Professor with appointments in the Annenberg School for Communication, School of Engineering and Applied Science, and Wharton School. “We wanted to equip regular people with a powerful, useful resource to better understand how major events, like this election, are being reported on.”

What Public Discourse Gets Wrong About Misinformation Online

Researchers at the Computational Social Science Lab (CSSLab) at the University of Pennsylvania, led by Stevens University Professor Duncan Watts, study Americans’ news consumption. In a new article in Nature, Watts, along with David Rothschild of Microsoft Research (Wharton Ph.D. ’11 and PI in the CSSLab), Ceren Budak of the University of Michigan, Brendan Nyhan of Dartmouth College, and Annenberg alumna Emily Thorson (Ph.D. ’13) of Syracuse University, review years of behavioral science research on exposure to false and radical content online. They find that exposure to harmful and false information on social media is minimal for all but the most extreme people, despite a media narrative that claims the opposite.

Mapping Media Bias: How AI Powers the Computational Social Science Lab’s Media Bias Detector

Every day, American news outlets collectively publish thousands of articles. In 2016, according to The Atlantic, The Washington Post published 500 pieces of content per day; The New York Times and The Wall Street Journal more than 200. “We’re all consumers of the media,” says Duncan Watts, Stevens University Professor in Computer and Information Science. “We’re all influenced by what we consume there, and by what we do not consume there.”

Mapping How People Get Their (Political) News

New data visualizations from the Computational Social Science Lab show how Americans consume news....

The CSSLab Launches News Consumption Dashboard

The Computational Social Science Lab’s (CSSLab) new dashboard, Mapping the (Political) Information Ecosystem, is a set of four data visualizations that highlight Americans’ media consumption habits, with a focus on echo chambers and the news. This is the second in a series of dashboards launched by the CSSLab as part of its PennMAP (Penn Media Accountability) project, which focuses on expanding knowledge of the media by translating research papers into interactive, digestible content.

Misleading COVID-19 headlines from mainstream sources did more harm on Facebook than fake news

CAMBRIDGE, Mass., May 30, 2024 – Since the rollout of the COVID-19 vaccine in 2021, fake news on social media has been widely blamed for low vaccine uptake in the United States — but research by MIT Sloan School of Management PhD candidate Jennifer Allen and Professor David Rand finds that the blame lies elsewhere.

Reexamining Misinformation: How Unflagged, Factual Content Drives Vaccine Hesitancy

Factual, vaccine-skeptical content on Facebook has a greater overall effect than “fake news,”...

Homa Hosseinmardi and Sam Wolken Speak at Annenberg Workshop

Homa Hosseinmardi and Sam Wolken of the Computational Social Science Lab (CSSLab) were recently invited to speak at the Political and Information Networks Workshop on April 25-26. This workshop was organized by the Center for Information Networks and Democracy (CIND), a new lab under the Annenberg School for Communication. CIND studies how communication networks in the digital era play a role in democratic processes, and its research areas include Information Ecosystems and Political Segregation (or Partisan Segregation).

Estimating the Effect of YouTube Recommendations with Homa Hosseinmardi

In Analytics at Wharton’s Research Spotlight series, we highlight research by Wharton and...

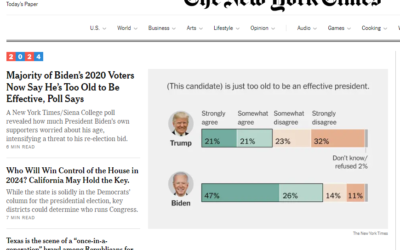

Joe Biden’s (but not Donald Trump’s) age: A case study in the New York Times’ inconsistent narrative selection and framing

On the weekend of March 2-3, 2024, the landing page of the New York Times was dominated by coverage of their poll showing voter concern over President Biden’s age. There was a lot of concern among Democrats about the methods of the poll, especially around the low response rate and leading questions. But as a team of researchers who study both survey methods and mainstream media, we are not surprised that people are telling pollsters they are worried about Biden’s age. Why wouldn’t they? The mainstream media has been telling them to be worried about precisely this issue for months.