Project Ratio

“Fake news,” broadly defined as false or misleading information masquerading as legitimate news, is frequently asserted to be pervasive online with serious consequences for democracy. The rise of fake news highlights the erosion of long-standing institutional bulwarks against misinformation in the internet age. In particular, since the 2016 US presidential election, the deliberate spread of misinformation on social media has generated extraordinary concern, in large part because of its potential effects on public opinion, political polarization, and ultimately democratic decision making. Inspired by “solution-oriented research,” Project Ratio aims to foster a news ecosystem and culture that values and promotes authenticity and truth.

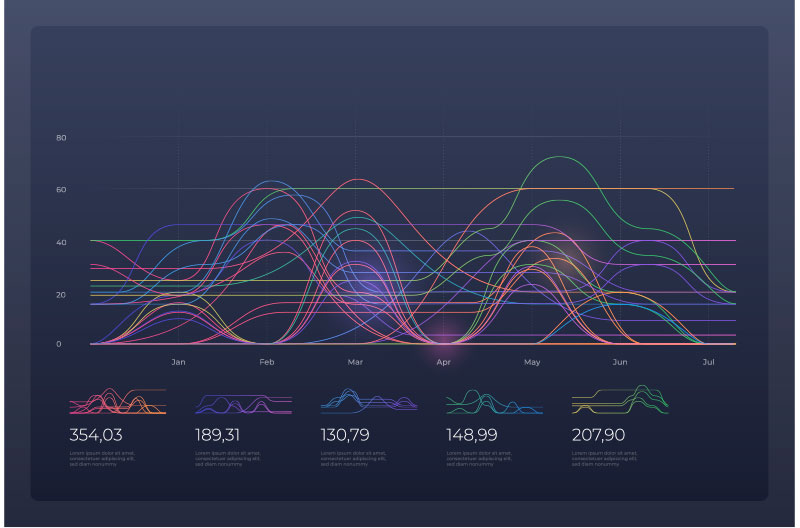

However, a proper understanding of misinformation and its effects requires a much broader view of the problem, encompassing biased and misleading (but not necessarily factually incorrect) information that is routinely produced or amplified by mainstream news organizations. Much remains unknown regarding the vulnerabilities of individuals, institutions, and society to manipulation by malicious actors. Project Ratio measures the origins, nature, and prevalence of misinformation, broadly construed, as well as its impact on democracy. We strive for objective and credible information, providing a first-of-its-kind, at-scale, real-time, cross-platform mapping of news content as it moves through the “information funnel,” from production, through distribution and discovery, to consumption and absorption.


KEY RESEARCHERS

Duncan Watts

Stevens University Professor & twenty-third Penn Integrates Knowledge Professor

David Rothschild

Research Scientist @ Microsoft

Homa Hosseinmardi

Research Scientist

PUBLICATIONS

Rebuilding legitimacy in a post-truth age

Duncan J. Watts and David Rothschild.

The current state of public and political discourse is in disarray. Outright fake news stories circulate on social media. The result has been called a post-truth age, in which evidence, scientific understanding, or even just logical consistency have become increasingly irrelevant to political argumentation.

Don’t blame the election on fake news. Blame it on the media.

Duncan J. Watts and David Rothschild.

Since the 2016 presidential election, an increasingly familiar narrative has emerged concerning the unexpected victory of Donald Trump. Fake news was amplified on social networks. We believe that the volume of reporting around fake news, and the role of tech companies in disseminating those falsehoods, is both disproportionate to its likely influence on the outcome of the election and a distraction from the culpability of the mainstream media itself.

The science of fake news

David M. J. Lazer, Matthew A. Baum, Yochai Benkler, Adam J. Berinsky, Kelly M. Greenhill, Filippo Menczer, Miriam J. Metzger, Brendan Nyhan, Gordon Pennycook, David Rothschild, Michael Schudson, Steven A. Sloman, Cass R. Sunstein, Emily A. Thorson, Duncan J. Watts and Jonathan L. Zittrain.

The rise of fake news highlights the erosion of long-standing institutional bulwarks against misinformation in the internet age. We discuss extant social and computer science research regarding belief in fake news and the mechanisms by which it spreads.

DATA

DATA OVERVIEW

The rise of big data has made the sheer quantity of available data salient, nourishing the soil for qualitative research and analysis that addresses the social, economic, cultural, and ethical implications and issues of social science. Bringing together computer science and social science, Project Ratio proposes a use-inspired research style and data-driven methodological directions for computational social science, yielding a diversity of perspectives on the explanation, understanding, and prediction of information flow and impact. Collaborating with various data providers, currently including Nielsen, PeakMetric, TVEyes, and Harmony Labs, we seek to establish a large-scale data infrastructure for studying production, distribution, consumption, and absorption in the information ecosystem, illuminating each aspect of research on “fake news” in depth and breadth.

Grumpy Voters Want Better Stories. Not Statistics

In the final count, Trump collected 312 electoral votes to 226 for Democratic nominee Kamala Harris. While some votes are still being counted, the broad trends that won the election for Trump are also coming into focus. Echoing public opinion scholars, Duncan Watts of the University of Pennsylvania’s Annenberg School for Communication, author of Everything Is Obvious Once You Know the Answer, believes that Trump benefited from a broad anti-incumbent trend seen in elections worldwide; that sentiment swung enough undecided voters to his tally to win him the swing states needed for victory.

Commonsensicality: A New Platform to Measure Your Common Sense

Most of us believe that we possess common sense; however, we find it challenging to articulate which of our beliefs are commonsensical or how “common” we think they are. Now, the CSSLab invites participants to measure their own level of common sense by taking a survey on a new platform, The common sense project.

Since its launch, the project has received significant media attention; it was recently featured in The Independent, The Guardian, and New Scientist, attracting over 100,000 visitors to the platform just this past week.

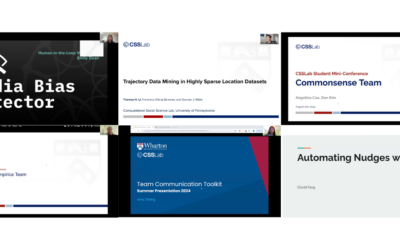

CSSLab 2024 End-of-Summer Research Seminar Recap

On August 2nd, ten undergraduate and Master’s students showcased their research at the third annual Student Research Mini-Conference, which featured presentations from all four major research groups at the Computational Social Science Lab (CSSLab) at Penn: PennMAP, COVID-Philadelphia/Human Mobility, Group Dynamics, and Common Sense. Here are the highlights from this conference:

CSSLab Establishes Virtual Deliberation Lab to Reduce Affective Polarization

When a Republican and a Democrat sit down to discuss gun control, how is it going to go? Conversations between Republicans and Democrats can be either productive or polarizing. Social scientists want to understand what makes conversations between people from competing social groups succeed, as positive conversations have proven to be one of the most effective ways to reduce intergroup conflict. However, when conversations go poorly, they can instead increase polarization and reinforce negative biases.

Accelerating the Path to a Master’s Degree

As a computer science major at Penn Engineering, Mahika Calyanakoti ’26 enjoyed her courses in data science, math, and machine learning. So when she decided to pursue an accelerated master’s degree, she chose Penn Engineering’s data science program.

“A lot of computer science undergrads go into the accelerated program in computer science, but I wanted a little more variety in my studies,” she says. “While the CIS master’s is a great program, I felt the Data Science master’s better suited my desire to broaden my academic horizons.”

The mechanics of collaboration

Like many clichés, the origins of the common mantra “Teamwork makes the dream work” are rooted in a shared experience.

For Xinlan Emily Hu, a fourth-year Ph.D. student at Wharton, that is, however, more than just a catchy saying. It is the foundation of her research into the science of teamwork. As she puts it, “The magic of a successful team isn’t just in having the right people; it’s in how those people interact and communicate.”

University of Pennsylvania Launches Penn Center on Media, Technology, and Democracy

The University of Pennsylvania today announced $10 million in funding dedicated to its new Center for Media, Technology, and Democracy. The Center will be housed in the School of Engineering and Applied Science (Penn Engineering) and will operate in partnership with five other schools at Penn.

The Center will benefit from a five-year, $5 million investment from the John S. and James L. Knight Foundation as well as an additional $5 million in combined resources from Penn Engineering, Penn Arts & Sciences, the Annenberg School for Communication, the Wharton School, Penn Carey Law, and the School of Social Policy & Practice.

Detecting Media Bias

When Duncan Watts and his colleagues at Penn’s Computational Social Science Lab began work in January on a new tool that uses artificial intelligence to analyze news articles in the mainstream media, they aimed to release it before the presidential debate on June 27.

“We built the whole thing from scratch in six months—which I’ve never experienced anything like in my academic career,” says Watts, the Stevens University Professor at the Annenberg School for Communication and the director of the Computational Social Science Lab (CSSLab). “It was a huge project, and a lot of people worked relentlessly to get it up.”

The Team Communication Toolkit: Emily Hu’s Award-Winning Project

Emily Hu, a fourth-year Wharton Operations, Information, and Decisions PhD student at the Computational Social Science Lab (CSSLab), launched her award-winning Team Communication Toolkit at the Academy of Management Conference on August 12 in Chicago. This toolkit allows researchers to analyze text-based communication data among groups and teams by providing over a hundred research-backed conversational features, eliminating the need to compute these features from scratch.

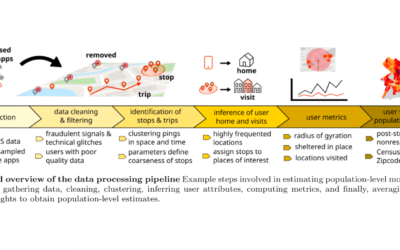

Overcoming the Challenges of GPS Mobility Data in Epidemic Modeling

Epidemic modeling is a framework for evaluating the location and timing of disease transmission events, and is a part of the larger field of human mobility science. The COVID-19 pandemic put existing epidemic models to the test, with many institutions and corporations employing models that utilized smartphone location data to measure human-to-human interactions and better understand potential transmissions and social distancing.