Project Ratio

“Fake news,” broadly defined as false or misleading information masquerading as legitimate news, is frequently asserted to be pervasive online, with serious consequences for democracy. The rise of fake news highlights the erosion of long-standing institutional bulwarks against misinformation in the internet age. Particularly since the 2016 US presidential election, the deliberate spread of misinformation on social media has generated extraordinary concern, in large part because of its potential effects on public opinion, political polarization, and ultimately democratic decision making. Inspired by “solution-oriented research,” Project Ratio aims to foster a news ecosystem and culture that values and promotes authenticity and truth.

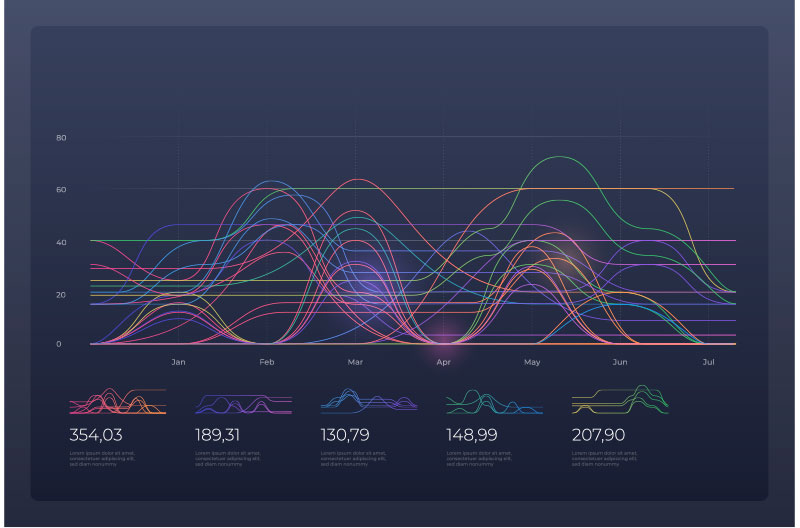

However, a proper understanding of misinformation and its effects requires a much broader view of the problem, one that encompasses biased and misleading (but not necessarily factually incorrect) information that is routinely produced or amplified by mainstream news organizations. Much remains unknown about the vulnerabilities of individuals, institutions, and society to manipulation by malicious actors. Project Ratio measures the origins, nature, and prevalence of misinformation, broadly construed, as well as its impact on democracy. We strive for objective and credible information, providing a first-of-its-kind, at-scale, real-time, cross-platform mapping of news content as it moves through the “information funnel”: from news production through distribution, discovery, consumption, and absorption.

[Figure panels: “Before the 2016 Election” and “After the 2016 Election”]

KEY RESEARCHERS

Duncan Watts

Stevens University Professor & twenty-third Penn Integrates Knowledge Professor

David Rothschild

Research Scientist @ Microsoft

Homa Hosseinmardi

Research Scientist

PUBLICATIONS

Rebuilding legitimacy in a post-truth age

Duncan J. Watts and David Rothschild.

The current state of public and political discourse is in disarray. Outright fake news stories circulate on social media. The result has been called a post-truth age, in which evidence, scientific understanding, or even just logical consistency have become increasingly irrelevant to political argumentation.

Don’t blame the election on fake news. Blame it on the media.

Duncan J. Watts and David Rothschild.

Since the 2016 presidential election, an increasingly familiar narrative has emerged concerning the unexpected victory of Donald Trump: fake news was amplified on social networks. We believe that the volume of reporting around fake news, and the role of tech companies in disseminating those falsehoods, is both disproportionate to its likely influence on the outcome of the election and diverts attention from the culpability of the mainstream media itself.

The science of fake news

David M. J. Lazer, Matthew A. Baum, Yochai Benkler, Adam J. Berinsky, Kelly M. Greenhill, Filippo Menczer, Miriam J. Metzger, Brendan Nyhan, Gordon Pennycook, David Rothschild, Michael Schudson, Steven A. Sloman, Cass R. Sunstein, Emily A. Thorson, Duncan J. Watts and Jonathan L. Zittrain.

The rise of fake news highlights the erosion of long-standing institutional bulwarks against misinformation in the internet age. We discuss extant social and computer science research regarding belief in fake news and the mechanisms by which it spreads.

DATA

DATA OVERVIEW

The rise of big data has made the sheer quantity of available data salient, creating fertile ground for qualitative research and analysis that addresses the social, economic, cultural, and ethical implications of social science questions. Bringing computer science and social science together, Project Ratio adopts a use-inspired research style and data-driven methods for computational social science, yielding diverse perspectives on explaining, understanding, and predicting the flow and impact of information. Collaborating with various data providers, currently including Nielsen, PeakMetric, TVEyes, and Harmony Labs, we seek to establish a large-scale data infrastructure for studying the production, distribution, consumption, and absorption of information in the news ecosystem, illuminating each aspect of research on “fake news” in both depth and breadth.

How cable news has diverged from broadcast news

Walter Cronkite was often cited as “the most trusted man in America” as he delivered the news on CBS in the 1960s and ’70s—a time when fewer news options created a “shared reality” that scholars argue fostered civic engagement, empathy, and shared national identity. The situation looks quite different in today’s disparate media landscape.

Duncan Watts Presents Fake News, Echo Chambers, and Algorithms at Penn Engineering ASSET Seminar

Computational Social Science Lab (CSSLab) Director Duncan Watts presented “Fake News, Echo Chambers, and Algorithms: A Data Science Perspective” at an ASSET (AI-enabled Systems: Safety, Explainability and Trustworthiness) Weekly Seminar on April 23, 2025.

Researcher Spotlight: CSSLab Members Present Work at Annenberg Workshop

Annenberg PhD student Neil Fasching and postdoctoral researcher Jennifer Allen of the Computational Social Science Lab (CSSLab) recently presented their research on April 15th at the 2nd annual Center for Information Network Democracy (CIND) Workshop: The Impact of AI on (Mis)Information. CIND is an initiative led by Annenberg faculty members Sandra González-Bailón and Yphtach Lelkes, focused on the impact of information ecosystems on democratic participation in the digital age.

Can Social Media Be Less Toxic?

In an era where online interactions can be both motivational and toxic, researchers from the Computational Social Science Lab (CSSLab) at the University of Pennsylvania are interested in what encourages prosocial behavior — acts of kindness, support, and cooperation — on social media.

News On Climate Change Is More Persuasive Than Expected, Study Finds

Climate change is one of the most pressing challenges of our time, demanding urgent and effective action to mitigate its severe impacts. One barrier to effective climate action is the issue's polarizing nature, driven largely by the media, as people prefer to consume news that aligns with their political beliefs. This tendency is especially strong among climate skeptics, who are more inclined to seek information that reinforces their views on climate change. In this context, communication, especially on social media, plays a crucial role in bridging partisan divides. However, meaningful dialogue may be hampered if individuals do not believe these interactions will be effective.

From Cracks to Gardens: Creating a Thriving Social Media Through Research

Early advocates of social media believed that these platforms would lead to positive outcomes. When Facebook launched in 2004, it was praised for its ability to “connect the entire world.” In hindsight, many of these ideals were overly optimistic: social media platforms are now often criticized for spreading hate and misinformation.

New Study Challenges YouTube’s Rabbit Hole Effect on Political Polarization

With 2.1 billion monthly users, YouTube is a major media platform that has become an important component of many Americans’ news diets. At the same time, it has garnered a reputation for stoking the flames of political extremism, making it a focal point for researchers studying video-streaming platforms and their intersection with political polarization. The mainstream media has also taken an interest in extremism on YouTube: New York Times writer and Princeton professor Zeynep Tufekci has argued that YouTube’s recommendation algorithm radicalizes its users by exposing them to increasingly polarizing content.

How the Media Distorts Perceptions on Chronic Disease Risks

Silent illnesses, or chronic diseases, contribute to 70% of deaths in the US annually, and six in ten Americans suffer from at least one chronic condition. Despite this, coverage of this public health crisis is disproportionately overshadowed by sensational risks, including terrorism, homicide, and traffic accidents, incidents that are far more likely to grab readers’ attention.

Violent Language in Films Has Increased Since the 1970s: A New Study

Violent entertainment has entered the public discourse due to rising concerns about the graphic nature of highly popular video game franchises, including Grand Theft Auto (GTA) and Call of Duty. But what about violence in films, which are enjoyed by a much larger and more diverse audience? After the Motion Picture Association of America (MPAA) film rating system established the R rating in 1968, violent content in films increased.

Research Assistant Spotlight: Priya D’Costa Presents at NeurIPS

This past weekend, CSSLab alumna Priya D’Costa presented a poster titled “What do you say or how do you say it?” at the NeurIPS 2024 Behavioral ML Workshop in Vancouver (December 10–15), a first-time workshop exploring how insights from the behavioral sciences can be incorporated into AI models and systems. In this RA spotlight, Priya shares more about her background and the research leading up to NeurIPS.