Originally published on the Empirica blog

Welcome to “Empirica Stories” — a series in which we highlight innovative research from the Empirica community, showcasing the possibilities of virtual lab experiments!

Today, we highlight the work of Eaman Jahani and collaborators on their preprint “Exposure to Common Enemies can Increase Political Polarization: Evidence from a Cooperation Experiment with Automated Partisans.”

Eaman Jahani is a postdoctoral associate in the Department of Statistics at UC Berkeley. He completed his PhD at MIT, where his research focused on micro-level structural factors, such as network structure, that contribute to the unequal distribution of resources or information. As a computational social scientist, he uses methods from network science, statistics, experimental design, and causal inference. He is also interested in collective behavior in institutional settings, and in the institutional mechanisms that either promote cooperative behavior in networks or lead to unequal outcomes for different groups.

Tell us about your experiment!

To explore political polarization, our group ran an experiment with two “people” in each instance. The network was a simple dyad: one participant was a real human, and the other was in fact a bot that reacted to the human, with the pair interacting over 5 rounds. In each round, the human subject and the bot could update their answer to a question after seeing the answer the other player had submitted. Overall, we recruited about 1,000 subjects across 4 different treatments.

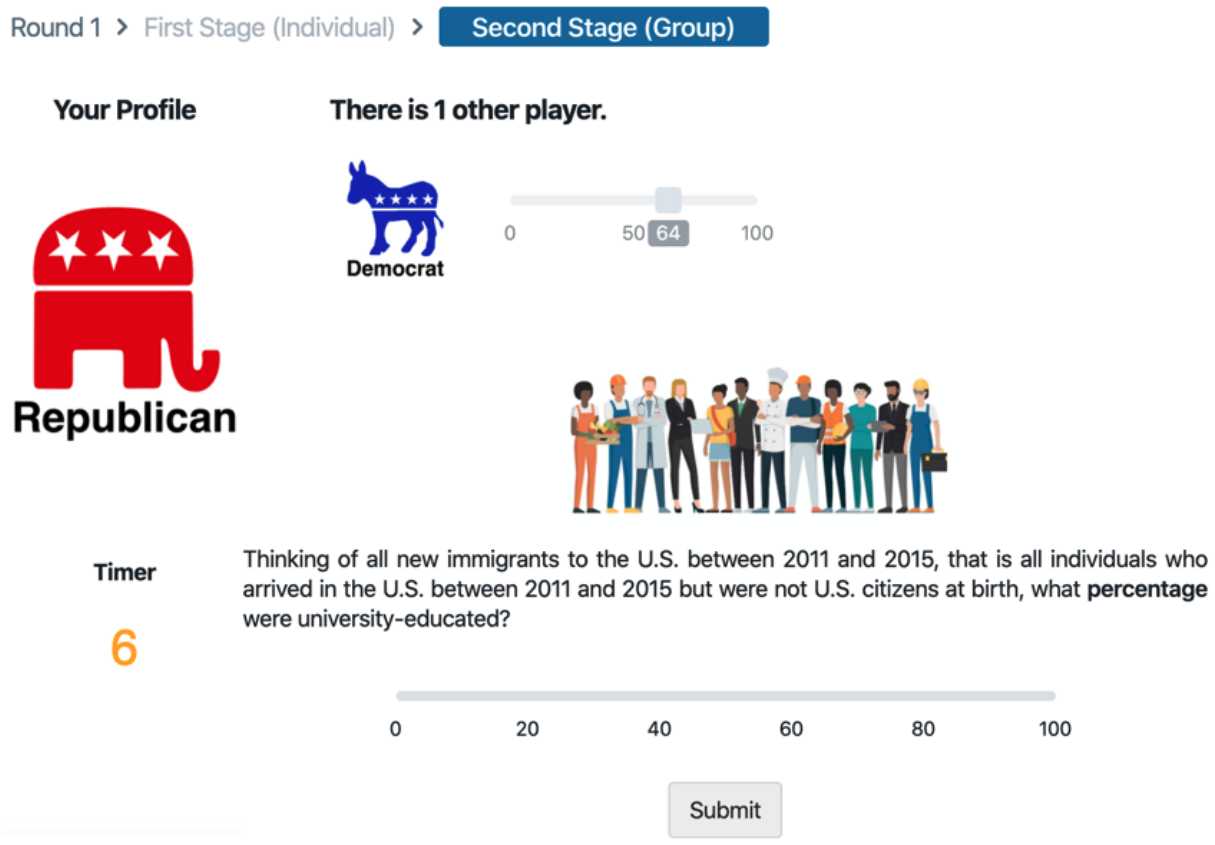

Experimental interface and design: Participants were randomly shown one of three priming articles: (1) “neutral” content on early human carvings in South Africa; (2) “patriotic” content on July 4th celebrations; and (3) “common-enemy” content on the combined threat of Iran, China, and Russia. After reading the article, participants were incentivized to accurately estimate the answer to a question about a political issue (for example, immigration, as shown above). After submitting their initial estimate, participants were shown the estimate of a politically opposed bot (presented as a human) and allowed to revise their own estimate.

What parts of Empirica’s functionality made implementing your experimental design particularly easy?

BOTS! Empirica’s flexible bot framework made it easy to program bots that allowed for cleaner interventions.

How much effort did it take to get your experiment up and running? Did you develop it or outsource?

Although I wasn’t very familiar with front-end development, I managed to design the experiments myself. The platform design was very intuitive and the callbacks were clearly explained, which made it easy to get up to speed with experiment design. At the time, I believe I was one of the first few users, and there was not much documentation. The user guide has improved significantly since then, so it should now be much easier for someone new to design an experiment from scratch. That said, even with limited documentation, the framework was intuitive enough that I was able to implement and launch 2 experiments without any problems.

What value do you think virtual lab experiments can add to your field of research?

Virtual lab experiments enable us to test hypotheses that would be nearly impossible or extremely costly to test in the field. Results from Empirica can give us a strong initial understanding of a phenomenon, potentially justifying a follow-up field experiment that is more costly and difficult to implement. Empirica particularly shines with networked experiments. These experiments can go wrong in so many ways in the field, but here we can control the environment as much as possible and even create networks that include bots. Bots are especially useful because they allow us to test counterfactuals that might be rare in reality.

—

Learn more about Empirica by visiting the Empirica website or our Group Dynamics project page.

AUTHORS

ABDULLAH ALMAATOUQ

Affiliated researcher

Massachusetts Institute of Technology